Introduction

Listening to a student read orally provides direct information about that student’s academic progress. Immediately, the listener has evidence of the student’s progress toward acquiring an academic skill that is expected to be learned in the first few years of formal schooling. The listener can then use that information to adjust instruction as needed.

The Early Grade Reading Assessment (EGRA)—a direct, individual-student-level assessment that includes oral reading—generates such information. The assessment is a collection of 15 subtasks (Dubeck & Gove, 2015), of which five were used for the current study. The EGRA has been used in over 75 low- and middle-income countries in 125 languages. It has been applied in longitudinal research funded by foundations (Jukes et al., 2017) and in numerous donor-funded development projects, for monitoring and evaluation.

Generally, the EGRA is used to measure lower primary grade students’ progress toward learning to read. (For more on the EGRA’s purpose, see Dubeck et al., 2016; Dubeck & Gove, 2015.) Two commonly reported EGRA results are the average number of words from a grade-appropriate passage that students read aloud accurately in 1 minute and students’ comprehension of what they read. Examining these results illustrates how reading skills develop: readers first develop word identification skills, and with more practice, those skills become automatic before readers’ attention shifts to comprehension (Chall, 1983). The ability to read a grade-appropriate passage orally and answer related questions is an authentic expectation in the lower primary grades, but measuring just one skill yields a limited view of students’ reading ability. The current study presents an alternative methodology for using EGRA results; the methodology was first applied and described in Stern et al. (2018).

Large-scale donors such as the United States Agency for International Development (USAID) use EGRA oral passage-reading results to understand the effectiveness of the reading instruction they support. Using only the oral passage reading results to measure student progress, however, overlooks students’ acquisition of the measurable skills that precede oral passage reading. For example, even before learners interact with print, they develop oral language skills such as listening comprehension and phonological awareness. Students then use phonological awareness as they learn the symbols that represent sounds; that is, they discover how to decode symbols into words. As students’ reading skills progress, they apply knowledge of those sound–symbol relationships more accurately and eventually automatically, allowing them to interact with increasingly complex texts. Ultimately, the goal is for students to read grade-level texts independently with fluency and understanding (i.e., comprehension).

The progression is presumed to be similar for all new readers; yet, even with effective instruction, students learn these skills at different rates: assessment results from a variety of contexts in a variety of languages have produced evidence that individual students learn specific skills at slower or faster rates than their classmates. Some children learn to read without formal instruction, whereas children at the opposite extreme require intensive individualized instruction to reach the same point (Boscardin et al., 2008; Caravolas et al., 2013; Georgiou et al., 2008; Vellutino et al., 1996). In the middle are the majority of children who learn reading skills in a predictable sequence, moving from oral knowledge to print knowledge at varying rates. Classroom instruction needs to respond to these different needs to ensure that students develop all of the foundational skills for reading success.

Differentiated literacy instruction—that is, instruction targeted to individual needs—is beneficial in the early stages of reading development when students need to learn symbols (e.g., letters) and the sounds they represent. Providing systematic and explicit instruction helps to prevent reading difficulties, and when the instruction is targeted to students’ instructional needs, they will show growth (Piper et al., 2018; Vellutino et al., 1996). This paper focuses on how reading assessment results from the EGRA can help inform literacy instruction in classrooms by identifying foundational skills that are lacking, allowing teachers to instruct their students in those skills.

Purpose

As mentioned, the EGRA oral passage reading subtask is typically administered alongside other reading-related subtasks to help diagnose why children may not be meeting grade-level expectations on oral reading fluency. Results on these additional subtasks can be used in conjunction with the oral passage reading results to understand instructional growth and needs. For the current study, we applied a learner-profile method developed using a national data set of Grade 2 Indonesian students (Stern et al., 2018) to data from Ugandan students.

Developing learner profiles begins with examining the results of the EGRA oral passage reading subtask. Then the learner profiles are cross-referenced with results on other EGRA subtasks to show what skills need to be developed to progress from one learner profile to the next. Ultimately, the profiles can guide classroom instruction to meet the reading needs of students so that those who lack a particular reading skill receive appropriate practice and instruction regardless of grade level. Furthermore, they help to shift the interpretation of results from a deficit perspective, meaning what students cannot do on one subtask, to a recognition of their abilities.

We created a set of learner profiles from archival data from Grade 2 students learning to read in Bahasa Indonesia (Stern et al., 2018), which was a first language (L1) for roughly half of the students in the sample. Those data yielded five distinct learning profiles (see Table 1). We found that Indonesian classrooms were heterogeneous, with 70 percent of classrooms having three or four profiles and nearly 20 percent of classrooms having all five profiles (Stern et al., 2018). The current study replicated the methods used in the Indonesian analysis with data from Uganda, a context where most of the students attending government schools learn in a local language.

Besides our interest in the efficacy of the learner-profile methodology for students learning to read in a local language, we were also interested in whether the method would show differences by language within a similar context. The Ugandan data included assessments of students from several language groups who shared some contextual factors (e.g., a common instructional program), which allowed us to examine differences by language and to explore the replicability of the method.

Research Question 1. Does the learner-profile methodology used in Bahasa Indonesia distinguish group membership in two Bantu languages or a Nilotic language?

Research Question 2. Does the learner-profile methodology measure change in learner profiles between two time points, from Grade 1 to Grade 4, in two Bantu languages or a Nilotic language? At a single time point, we unsurprisingly found heterogeneous profiles within the Indonesian sample, as it is well documented that some children’s reading skills progress faster than others’ (Boscardin et al., 2008; Caravolas et al., 2013; Georgiou et al., 2008; Vellutino et al., 1996). Our Ugandan data, from surveys administered 3 years apart with cohorts of students in Grade 1 and then Grade 4, allowed us to determine whether we could extend the methodology to show profile change over time.

Research Question 3. Does the distribution of learner profiles in classrooms change from Time 1 (a 2013 baseline with Grade 1 students) to Time 2 (a 2016 endline with Grade 4 students)? One intention of the learner-profile methodology is to help guide instruction so that children are not bored by content that is too easy for them or frustrated by content that is too difficult. Therefore, we wanted to see whether changes in the distribution of profiles could help guide instruction. The data used to answer this question came from cohorts of children at two time points who were attending the same schools. Because our design used cohort samples rather than longitudinal data, we were interested in whether and how the proportion of students in each profile would change over time. If the methodology indicated changes in the learner-profile distribution, it could offer an alternative to passage reading results for showing reading progress.

Methodology

For this study, we analyzed an archival data set from USAID’s 5-year education project in Uganda, the School Health and Reading Program (SHRP). The project aimed to improve early-grade reading skills for students in Grades 1 to 4 who were learning to read in the language of their local community (for more on SHRP, see Brunette et al., 2019).

Participants

The students whose data we analyzed attended Ugandan government schools in districts implementing SHRP in one of three program languages: Ganda, Lango, or Runyankore-Rukiga. Using a stratified design, we randomly sampled between 56 and 61 schools in each district, after which we randomly selected 20–30 students per school (stratifying by gender to evenly split between boys and girls). We examined results by cohort at two time points: Time 1, a 2013 baseline with Grade 1 students; and Time 2, a 2016 endline with Grade 4 students (Ganda n = 2,820; Lango n = 3,237; Runyankore-Rukiga n = 2,767). Our design was cohort-based, not longitudinal. In other words, we randomly sampled students from Grade 1 in 2013 and again from Grade 4 in 2016, as opposed to tracking individual students over time. We examined changes in demographic factors to determine the level of consistency across characteristics for the two grade-level samples (for the limited factors available in our data). Generally, we found the following trends: the Grade 4 cohort was more likely to report having mobile phones, electricity, and televisions in their home; Grade 4 students were also more likely to report bringing books home from school; there were no clear trends across languages for the availability of computers or cars or trucks at home, nor were there consistent trends in the proportion of students reporting that they saw people reading at home.[1]
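The two-stage design described above (schools sampled within each district, then a gender-balanced set of students within each sampled school) can be sketched in Python. This is a minimal illustration under stated assumptions, not the SHRP sampling procedure: the nested-dictionary roster format, the `sample_students` function, and the "M"/"F" gender codes are hypothetical, and the sketch ignores sampling weights and the replacement of nonresponding schools.

```python
import random


def sample_students(schools_by_district, n_schools, n_per_school, seed=0):
    """Hypothetical two-stage sample: schools within districts, then students.

    schools_by_district maps district -> {school: [(student_id, gender), ...]},
    where gender is "M" or "F" (an assumed coding).
    Returns a list of (district, school, student_id) tuples.
    """
    rng = random.Random(seed)
    sample = []
    for district, schools in schools_by_district.items():
        # Stage 1: simple random sample of schools within the district (stratum).
        chosen = rng.sample(sorted(schools), min(n_schools, len(schools)))
        for school in chosen:
            roster = schools[school]
            boys = [sid for sid, g in roster if g == "M"]
            girls = [sid for sid, g in roster if g == "F"]
            # Stage 2: equal numbers of boys and girls; if one group is small,
            # both are capped so the split stays even.
            k = min(n_per_school // 2, len(boys), len(girls))
            picked = rng.sample(boys, k) + rng.sample(girls, k)
            sample.extend((district, school, sid) for sid in picked)
    return sample
```

Capping both groups at the size of the smaller one keeps the even gender split the text describes, at the cost of drawing fewer than `n_per_school` students in unbalanced schools.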

Languages

Both Ganda and Runyankore-Rukiga are Bantu languages that use Roman script and have predominantly open syllables (i.e., syllables typically end in a vowel), agglutinating structures (one word may represent a phrase or sentence with a subject, verb, and object), and consistent letter–sound correspondence. Ganda (or Luganda) is used in the capital, Kampala, and is spoken by 5.5 million people as a first language and another 1 million as a second language (Eberhard et al., 2021). Runyankore-Rukiga (also known as Kiga or Nkore) is spoken by roughly 2.4 million people in western Uganda (Simons & Fennig, 2017). Lango (or Leblango) is a Nilotic language that also uses Roman script and has a mix of open and closed syllables. Over 2 million people, mostly in northern Uganda, speak Lango (Eberhard et al., 2021).

Measures

All students were assessed using the version of the EGRA that had been adapted into their language. The subtasks examined in the current analysis include the following:

- Listening comprehension. The listening comprehension subtask uses both explicit and inferential questions to measure students’ understanding of an orally read story. It is untimed and does not have a discontinuation rule.
- Letter-sound knowledge. The letter-sound knowledge subtask measures knowledge of letter–sound correspondences. Letters of the alphabet are repeated to form a set of 100 letters, presented in random order in both upper and lower case. It is timed to 60 seconds and is discontinued if students do not correctly produce any sounds from the first line (i.e., 10 letters).
- Phonological awareness. The phonological awareness subtask measures the ability to segment a word into individual phonemes (Lango) or syllables (Ganda, Runyankore-Rukiga). This subtask is oral and has 10 items. It is discontinued if no points are earned in the first five items.
- Nonword reading. The nonword reading subtask measures the ability to decode individual words that are not real words but that follow common orthographic structures for that language. Fifty nonwords are presented. The subtask is timed to 60 seconds and is discontinued if a student fails to read any of the first five words correctly.
- Oral reading fluency. The oral reading fluency subtask measures the ability to read aloud a brief passage with a familiar story structure (Ganda, 43 words; Lango, 49 words; Runyankore-Rukiga, 49 words). It is scored for accuracy and rate of reading, timed to 60 seconds, and discontinued if students do not read any words in the first line (i.e., about 10 words) correctly. Students read the same Grade 2 passage at both time points.
- Reading comprehension. Reading comprehension is measured by asking explicit and inferential questions to determine students’ understanding of what they read.
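The discontinuation rules and timed scoring listed above can be expressed as small helper functions. The sketch below is illustrative only: the function names are hypothetical, and prorating the per-minute rate for a student who finishes before 60 seconds is an assumption about scoring that the subtask descriptions do not spell out.

```python
def discontinued(responses, check_first):
    """True if the subtask stops early: no correct response among the first
    `check_first` items (10 letters on the first line of letter sounds,
    5 items for phonological awareness and nonword reading).

    responses: booleans in presentation order (True = correct / point earned).
    """
    return not any(responses[:check_first])


def per_minute_rate(correct, seconds_used, time_limit=60):
    """Correct items per minute for a timed subtask.

    Assumption: a student who finishes before the time limit is credited
    with the rate achieved, i.e., correct * 60 / seconds_used.
    """
    if not 0 < seconds_used <= time_limit:
        raise ValueError("seconds_used must be in (0, time_limit]")
    return correct * 60 / seconds_used


def accuracy(correct, attempted):
    """Share of attempted items read correctly (0.0 to 1.0)."""
    return correct / attempted if attempted else 0.0
```

For example, a student who reads 43 words correctly and finishes the passage in 40 seconds would be credited with 64.5 correct words per minute under this assumption.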

In each of the three languages, the same version of the EGRA was used at both time points (2013 and 2016), and the instruments were initially adapted, field tested, and piloted before the 2013 data collection. In 2016, the individual items (i.e., letters and words) in each row were rearranged. The same reading passage and questions were used at both time points.

Our reliability estimates showed strong internal consistency across subtasks. (Internal consistency was estimated for the nonword, oral reading fluency, and reading comprehension subtasks. Because only zero scores are included for listening comprehension, letter sounds, and phonological awareness, these subtasks were not included in the reliability estimates.) Cronbach’s alpha for Luganda was 0.93 in Grade 1 and 0.95 in Grade 4; for Lango, it was 0.87 in Grade 1 and 0.89 in Grade 4; and for Runyankore-Rukiga, it was 0.92 in Grade 1 and 0.95 in Grade 4.
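For readers unfamiliar with the statistic, Cronbach’s alpha compares the sum of the individual item variances with the variance of the total score: alpha = k/(k - 1) × (1 - sum of item variances / total-score variance), where k is the number of items. A minimal Python sketch follows. It assumes a complete student-by-item score matrix with no missing values; whether the estimates reported above used individual items or subtask totals is not stated, and the function name is hypothetical.

```python
from statistics import variance


def cronbach_alpha(scores):
    """Cronbach's alpha for a list of rows (one per student), each a list
    of k item scores. Sample variances are used throughout; because the
    same estimator appears in numerator and denominator, the ratio is the
    same as with population variances."""
    k = len(scores[0])
    if k < 2:
        raise ValueError("need at least two items")
    columns = list(zip(*scores))                  # one tuple per item
    item_var = sum(variance(col) for col in columns)
    total_var = variance([sum(row) for row in scores])
    return k / (k - 1) * (1 - item_var / total_var)
```

Perfectly consistent items (every student gets the same score on each item) give an alpha of 1.0; weaker agreement between items pulls the value down.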

Data Collection

Following a multiday training, assessors were selected based on their consistency with their peers in administration and on other qualities such as demeanor with young children. Data were collected on electronic tablets using Tangerine software (http://www.tangerinecentral.org).

Findings

Stern et al. (2018) developed this learner-profile methodology for two reasons: (1) to have a more nuanced way to show reading progress and improvement when many students are not yet reading fluently and (2) to help to identify the reading skills of the sampled children to guide their subsequent instruction.

Research Question 1

For our first research question, concerning whether the learner-profile methodology could distinguish group membership at a single time point in two Bantu languages and one Nilotic language, we found that the methodology was appropriate, with one notable difference from the Indonesian context.

Students in the Fluent category—reading accurately and automatically, about a word per second, but with low comprehension—essentially did not exist in these three Ugandan data sets. In each of the three languages, less than 1 percent of students were Fluent (0.22 percent Ganda, 0.34 percent Lango, 0.1 percent Runyankore-Rukiga). In all three languages, the reading rates (correct words per minute, or cwpm) were right-skewed, with most students at the low end of the distribution. Therefore, we dropped the Fluent category and proceeded with just four profiles: Nonreader (0 cwpm), Beginner (1–20 cwpm), Instructional (at least 21 cwpm but unable to finish the entire passage), and Next-Level Ready (read the entire passage with at least 80 percent comprehension).
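The profile cut points above can be written as a simple decision rule. The sketch below is one plausible reading of the definitions, not the authors’ code: the `OrfResult` fields and the `assign_profile` name are hypothetical, the order in which the checks are applied is an assumption, and students who finish the passage with less than 80 percent comprehension are labeled Fluent here because the text does not say how those few students were handled after that category was dropped.

```python
from dataclasses import dataclass


@dataclass
class OrfResult:
    cwpm: float             # correct words per minute on the passage
    finished_passage: bool  # reached the last word within 60 seconds
    comprehension: float    # share of comprehension questions correct, 0.0 to 1.0


def assign_profile(r: OrfResult) -> str:
    """Map an oral reading result to a learner profile (assumed check order)."""
    if r.cwpm == 0:
        return "Nonreader"
    if r.cwpm <= 20:
        return "Beginner"
    if not r.finished_passage:
        return "Instructional"
    if r.comprehension >= 0.80:
        return "Next-Level Ready"
    # Finished the passage but with low comprehension: the Indonesian
    # "Fluent" profile, dropped for Uganda (under 1 percent of students).
    return "Fluent"
```

Checking reading rate before passage completion means that a student who reaches the end of the passage with many errors (20 cwpm or fewer) is still treated as a Beginner; this is a design choice of the sketch.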

Each of the remaining four profiles had members, and the results on the other administered subtasks were related to the four profiles. Table 2 presents the results by EGRA subtask. We ran regression models (not presented here) with gender, age, and grade as control variables and found that the relationships across profiles remained consistent and loaded together as expected. Controlling for these three variables (gender, age, grade) that influence reading outcomes (Crone & Whitehurst, 1999; Logan & Johnston, 2010) demonstrated that assignment to one of the four profiles was related to the results on the oral reading fluency subtask, independent of these student characteristics.

The Nonreader category represents students who did not have any reading ability (i.e., could not read a single word from the passage correctly). Based on their categorization, we expected the skills that precede word reading to be low as well, which is exactly what we found. Nonreaders had the largest proportion of students who could not accurately answer questions about a story read to them during the listening comprehension subtask. Between 30 and 47 percent did not know any of the letter sounds that are needed for initial word reading. Their ability to segment sounds or syllables also was low. Yet, interestingly, in Lango, the Nonreaders were not lowest in this emergent skill. We explore this finding further in the Discussion section.

The Beginner category represents students who were just beginning to apply their knowledge of the way letters represent sounds to read words. They were progressing because they had higher listening comprehension, letter-sound knowledge, and segmentation skills compared with Nonreaders. Beginners had emerging decoding skills, as evidenced by their accuracy on the nonword reading subtask, which ranged from 22 to 57 percent across languages and was 22 to 30 percentage points lower than that of the next category (Instructional). These students needed to practice decoding to increase word-reading accuracy.

Compared with Beginners, students in the Instructional category identified nonwords with more accuracy, and their accuracy with real words was 27 to 48 percentage points higher. Their slower reading rate, demonstrated by their not completing the passage, indicates that these students needed practice in rereading text to improve automaticity (i.e., rate).

The Next-Level Ready category includes students who were ready for more complex text than that of the EGRA passage they read. Their results on nonword reading showed decoding abilities that were 9 to 17 percentage points higher, and they were both faster and more accurate (5 to 6 percentage points higher) at identifying real words compared with students in the Instructional category. In addition, their comprehension abilities for the passage were higher. They could read and understand this level of text and would have benefited from exploring text at the next higher level.

Research Question 2

Our second line of inquiry involved extending the learner-profile methodology to see if it could show profile changes at two time points from Grade 1 to Grade 4. As the results presented in Table 3 indicate, we found that it could.

Specifically, after 3 years of instruction, the distribution shifted toward more advanced profiles. In all three languages, fewer students were classified as Nonreaders at the second time point. The percentage of students in the Next-Level Ready category expanded from virtually none in Grade 1 to between 3 and 13 percent in Grade 4. The two middle categories, Beginner and Instructional, accounted for between 64 and 76 percent of Grade 4 students but only 2 to 12 percent of Grade 1 students.
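Because the Grade 1 and Grade 4 samples are separate cohorts, change is best described as a difference between two profile distributions rather than as individual students moving between profiles. A minimal sketch under that assumption, with hypothetical function names, computes each distribution in percent and the shift in percentage points:

```python
from collections import Counter

# Profile labels used for Uganda (the Fluent profile was dropped).
PROFILES = ["Nonreader", "Beginner", "Instructional", "Next-Level Ready"]


def distribution(profiles):
    """Percentage of students in each profile for one cohort sample.
    Labels outside PROFILES still count toward the denominator."""
    counts = Counter(profiles)
    n = len(profiles)
    return {p: 100 * counts[p] / n for p in PROFILES}


def shift(time1, time2):
    """Change in percentage points from Time 1 to Time 2 for each profile.

    The two arguments are independent cohort samples, so this compares
    distributions; it does not track individual students.
    """
    d1, d2 = distribution(time1), distribution(time2)
    return {p: d2[p] - d1[p] for p in PROFILES}
```

With toy data in which 90 percent of a Grade 1 sample are Nonreaders and 30 percent of a Grade 4 sample are, `shift` reports -60 percentage points for Nonreader.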

Research Question 3

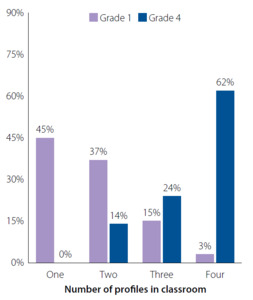

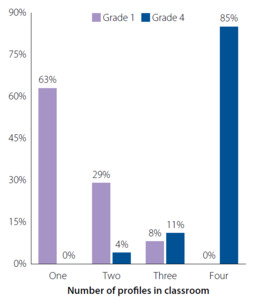

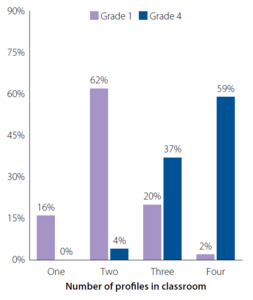

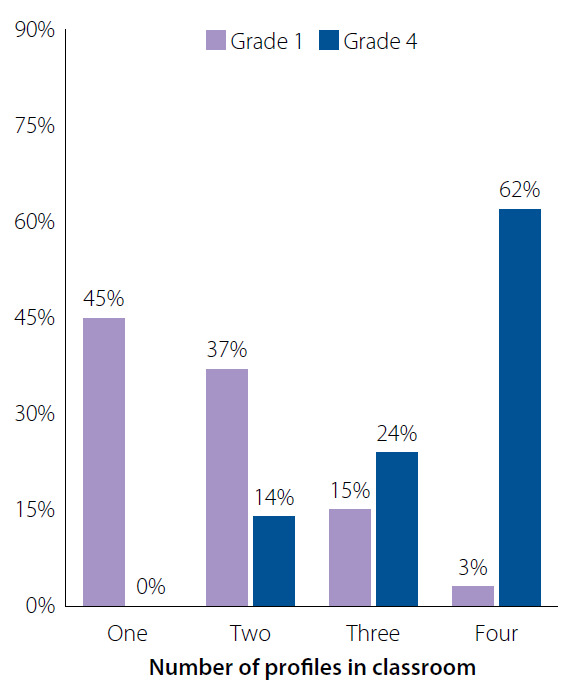

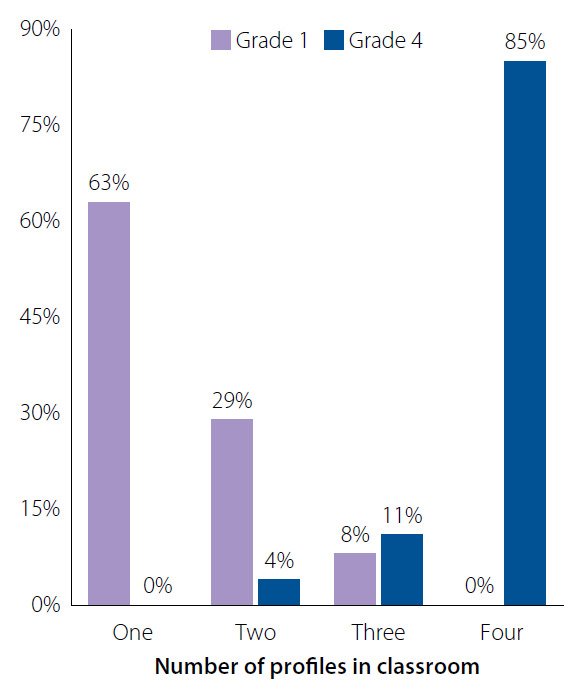

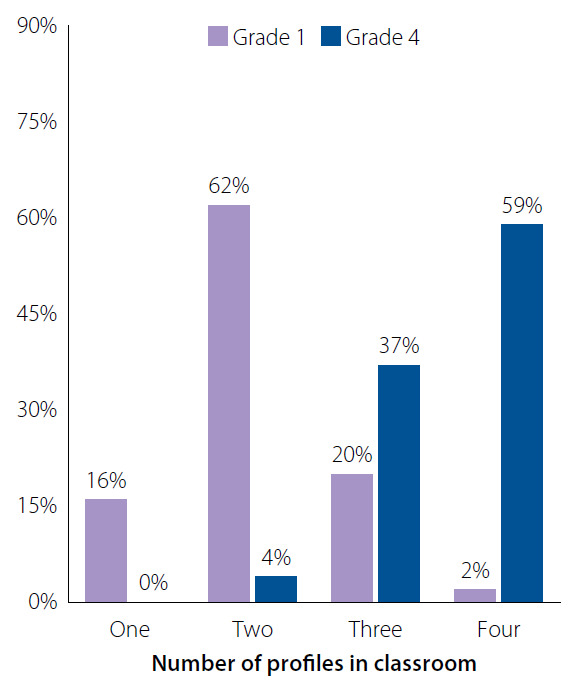

The third line of inquiry focused on how the distribution of learner profiles in classrooms changed from Grade 1 to Grade 4. Figures 1, 2, and 3 show, by language, that the number of different profiles represented in classrooms expanded during these 3 years. Specifically, 78 percent or more of Grade 1 classrooms had one or two profiles, and the highest proportion of Grade 1 classrooms with four profiles was 3 percent (Ganda-speaking classrooms). This trend flipped in Grade 4, when 59 to 85 percent of all classrooms had four profiles and none had just one profile. These findings indicate that teachers need to differentiate instruction across a greater range of skills as students progress through primary school.
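The classroom-level summary in Figures 1 to 3 amounts to counting the distinct profiles present in each classroom and then tabulating classrooms by that count. A minimal sketch, assuming one (classroom, profile) record per assessed student and the four Ugandan profiles; the function name and record format are hypothetical:

```python
from collections import Counter, defaultdict


def profiles_per_classroom(records):
    """Percentage of classrooms containing 1, 2, 3, or 4 distinct profiles.

    records: iterable of (classroom_id, profile) pairs for one grade and
    language.
    """
    seen = defaultdict(set)
    for classroom, profile in records:
        seen[classroom].add(profile)
    # How many classrooms have each number of distinct profiles.
    counts = Counter(len(found) for found in seen.values())
    total = len(seen)
    return {k: 100 * counts[k] / total for k in range(1, 5)}
```

Note that this counts profiles among the sampled students only, so a classroom may contain profiles that the 20 to 30 assessed students happen not to show.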

Discussion

This study used archival data from students learning to read in one of three languages in Uganda. Our goal was to determine whether a profiling methodology previously used with students reading in Bahasa Indonesia, which categorizes students based on their EGRA results, could be applied to show reading growth and to guide instruction. Overall, we found high levels of consistency across the profiles. Thus, these profiles show promise for universal applicability (or universal adaptability). Further, they could provide a consistent, common language for interpreting EGRA results and for defining their implications for instructional needs and policy supports. There are, however, several additional findings of note.

First, we found that the Ugandan students fell into four of the five profiles identified with the Indonesian students. The Fluent category—students who read quickly with minimal understanding—appeared in the Indonesia sample but was not observed in the Uganda samples. One reason for this discrepancy may be the very small number of students who read approximately one word per second in each of the three Ugandan languages. Fewer than 2 percent of the students in all three languages (1.5 percent in Leblango, 1.2 percent in Luganda, and 0.2 percent in Runyankore-Rukiga) read at this rate, the threshold for assignment to the Fluent and Next-Level Ready profiles. The small proportion of students who read at this rate limited the amount of variation in comprehension that could be observed.

Another reason why virtually no Ugandan students fell into the Fluent category may be related to differences in the language contexts. Roughly half of the students who were learning to read in Bahasa Indonesia were native speakers of that language. In contrast, all of the Ugandan students were learning to read in a language that was either their first language or the lingua franca of their community. This suggests that more of the Ugandan students were learning to read in a familiar language and had been acquiring the oral language skills that would facilitate learning to read in it. We note that the approximately 2 percent of students who read more than one word per second also had average reading comprehension scores between 87.7 percent and 95.4 percent (Table 1), suggesting that they had the oral language skills to understand the passage they read fluently. More information on the Ugandan students’ oral language skills in the languages in which they were learning to read would help explain this result (and could help others adapt these profiles to different contexts).

We observed a second interesting finding in the Lango students’ data. Among these students, the Nonreaders were not the lowest performers at segmenting words into sounds (i.e., phonological awareness); rather, the Beginners had more zero scores. On the other EGRA subtasks, Lango Beginners had higher skill levels than Nonreaders, as expected, so it is unusual that they had lower segmenting skills. One possible explanation is that some teachers emphasized this skill more than others did. This explanation is speculative, however, and would require further investigation to confirm.

Third, students’ word-reading accuracy sheds light on both the difference between Beginners and Instructional readers and the appropriateness of the text for helping them show growth. Differences in accuracy of decoding unfamiliar words (i.e., nonwords) between these two categories ranged from 21 to 30 percentage points across the three languages. This difference indicates that students in the Instructional category could more strongly apply the alphabetic principle to read nonwords. Furthermore, differences in accuracy on the real words attempted in the passage were 27 to 48 percentage points. Based on the accuracy achieved by Instructional readers (89 to 95 percent), the text was appropriate for their level (Mesmer, 2008). These results also indicate that the passage was too advanced for Beginners, whose accuracy ranged from 41 to 68 percent. Beginners should interact with text that they can read with roughly 89 to 95 percent accuracy.
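The text-appropriateness judgment above reduces to computing accuracy on attempted words and comparing it with the 89 to 95 percent band. The sketch below uses that band as its default; the function name and the labels it returns are hypothetical, and it makes no claim about what accuracy above the band implies.

```python
def text_fit(correct, attempted, low=0.89, high=0.95):
    """Classify a passage for a reader by word-reading accuracy.

    correct / attempted is the share of attempted words read correctly.
    The default band (89 to 95 percent) is the range discussed in the text.
    """
    if attempted <= 0:
        raise ValueError("attempted must be positive")
    accuracy = correct / attempted
    if accuracy < low:
        return "too difficult"   # below the band, as with most Beginners
    if accuracy <= high:
        return "appropriate"     # within the band, as with Instructional readers
    return "above band"          # no interpretation is implied here
```

A Beginner who reads 20 of 40 attempted words correctly (50 percent) falls below the band, whereas a reader at 45 of 49 (about 92 percent) falls within it.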

Fourth, this study illustrates the potential efficacy of using learner profiles to describe changes in students’ reading subskills and, ultimately, the effectiveness of reading instruction. Describing reading growth only by the average number of words read correctly in a minute and by understanding of the passage overlooks students’ growth on the subskills they need to become fluent readers. In these three data sets, between 2.5 percent and 12.9 percent of the Grade 4 students could read the passage with fluency and comprehension. Although these percentages were low, disaggregating by learner profile revealed measurable progress in the students’ reading skill development.

For example, focusing just on Runyankore-Rukiga (see Figure 4), we found that the percentage of students who were Next-Level Ready (i.e., read the passage with fluency and comprehension) increased by only 2 percentage points after 3 years; by Grade 4, only 2.5 percent of students were Next-Level Ready. Looking at the percentage of students in the other learner profiles, however, two-thirds of the students shifted from the lowest category, Nonreader, into the Beginner or Instructional profiles. Thus, although the students were not reaching the milestone of Next-Level Ready performance, their reading abilities had grown. Comparing the distributions of learner profiles, rather than looking only at the milestone achievement level, gives far more information about the success of an intervention or project. Although we have no reason to believe that these trends are unique to the Ugandan context, we recommend that researchers continue to explore them in other settings to determine their broad usefulness and applicability.

Finally, this study explored the proportions of learner profiles in classrooms. We found that most of the Grade 1 classrooms had only the two profiles that are adjacent in the early stages of reading—Nonreaders and Beginners. The results suggest that in Grade 1 classrooms with mostly homogeneous profiles, it would be appropriate to target many of the instructional activities to the entire class. In this context, instruction would focus on developing students’ awareness of the alphabetic principle, including that print represents speech and can be written. It would include activities such as name writing, labeling objects, learning the concept of a word within text, and learning about letters. Systematic and explicit instruction on combining letters to read and spell words would be appropriate. Listening and responding to stories read aloud would also be appropriate, to help students learn about text structure and how readers interact with text. Oral language activities that develop phonological awareness and word meaning would also help students advance to the next profile.

By Grade 4, most (59 to 85 percent) of the classrooms had four profiles. The skills represented by these four profiles ranged from those expected at the onset of Grade 1, such as being unable to read any words, to reading fluently with comprehension and being ready for more complex text. Heterogeneous profiles within classrooms are a well-documented phenomenon and are consistent with what we know about how reading skills develop; that is, some children progress faster than others (Lervåg & Hulme, 2010; Vellutino et al., 1996). Consequently, Grade 4 instruction must respond to the heterogeneous profiles through differentiated instruction or strategic and limited use of homogeneous groupings based on learner profiles. Additional strategies may include team teaching or inviting volunteers to the classroom to assist the teacher. The precise model selected to support the heterogeneous classroom is secondary to recognizing that all students need instruction at their current ability level. Without learner-profile-appropriate instruction, students will not progress efficiently.

Limitations

This study explores the efficacy of using learner profiles to measure reading growth and inform instruction. It has two limitations, however. First, our findings would have been enhanced if we had been able to analyze longitudinal data as opposed to cohort data. Students were not tracked longitudinally by the program that collected the data; a data set resulting from a more complex longitudinal design would have given us more confidence in the profile results.

Second, the current study was limited by the lack of information about students’ facility with the language of the assessment. In particular, more information about the students learning to read Ganda would have been helpful. The relatively high percentage of zero scores (17.6 percent) on listening comprehension among Grade 1 Nonreaders in classrooms where Ganda was the language of instruction suggests that fewer of these students had strong oral language skills in the language of instruction and assessment compared with students learning in the other two languages.

Conclusions

We recommend that EGRA results continue to highlight students’ performance on passage reading, because it is an authentic and understandable skill that we expect students to acquire in the lower primary grades. We also recommend, however, that educators and program evaluators using the EGRA examine the number of students who shift from one learner profile to another over time. This approach also would shift the interpretation of results from student deficits on one subtask toward recognizing their literacy growth on multiple subtasks. Furthermore, we believe that more thoroughly measuring students’ oral language skills would generate useful information on students’ learning needs.

Direct student assessments are administered to provide immediate evidence of a student’s progress in learning an academic skill, and the EGRA does produce information about reading progress. Yet presenting the results around just one skill, passage reading, misses an opportunity to describe students’ progress in the skills that precede it. We used a methodology that draws on the passage reading results to establish categories corresponding to particular learner profiles, each of which has instructional implications. The instructional needs associated with each profile are, in turn, supported by the results on the other EGRA subtasks. We hope that others will build on this work to examine the appropriateness and value of these learner profiles in other contexts. Doing so will improve our ability to discuss and understand the implications of EGRA results consistently across settings.

The purpose of this learner-profile methodology is to help guide instruction so that students are not bored by content that is too easy for them or frustrated by content that is too difficult. Few would dispute that assessments should be used to guide instruction and that instruction should meet students’ needs. The next steps, however, should focus on determining how best to address the different needs of students within classrooms.

Acknowledgments

The authors acknowledge the many children around the world who are learning to read and write. We hope studies like the one herein contribute to them choosing to read for pleasure, to explore their curiosities, and to document their findings.

[1] These trends represent descriptive changes, but interpreting them is complex. For example, we expected to see increases in mobile phones, electricity, and televisions from 2013 to 2016, in line with broader increases in access over that period. Furthermore, Grade 4 students are expected to take books home more often than Grade 1 students. Finally, there are questions about the reliability of students’ self-reports of home assets and other socioeconomic variables, with reliability likely higher among older students.