Introduction

In the past two decades, considerable progress has been made in research on mental health service provision for underserved Latinx people in the United States, including both immigrants and those born in the United States (Castro et al., 2010). Despite improvements in mental health access, Latinx people continue to seek mental health services less often than other racial and ethnic groups (Chang & Biegel, 2018; Derr, 2016; Fortuna et al., 2016). Latinx people, particularly first-generation immigrants, face significant barriers to treatment utilization, including lack of health insurance, language challenges, fear of discrimination, concerns about citizenship status, and stigma (Fortuna et al., 2016). As such, there is an emphasis in Latinx communities on increasing the feasibility of identifying mental health problems in primary care and specialized mental health settings, where people feel more comfortable disclosing information and more open to receiving treatment (Escovar et al., 2018).

It is well documented that underserved groups of people experienced more risk during the COVID-19 pandemic (Bambra et al., 2020). Social distancing and shelter-in-place ordinances enacted across the globe in response to the pandemic created a major hurdle in access to care. Many behavioral health providers were quick to embrace telehealth solutions to continue caring for their clients. Telehealth service provision via telephone and video was the main mental health service modality available during the pandemic (Saavedra et al., 2024; Uscher-Pines et al., 2021). Consequently, there has been a synchronized pivot to conducting treatment monitoring and other assessments via virtual delivery methods (video and audio-only) as well as via in-person delivery. After the COVID-19 pandemic, this need persists for people living in rural areas (Patel et al., 2025).

One of the most widely used instruments to assess self-reported depression, anxiety, and stress in both English and Spanish is the Depression, Anxiety, and Stress Scales (DASS; Gomez, 2016; P. F. Lovibond, 1998; S. H. Lovibond & Lovibond, 1995). The DASS is commonly used in primary care and community-based clinical settings, as well as research settings. The DASS is unique in that it allows for the assessment of depression, anxiety, and stress in one instrument. Moreover, the DASS is not tied to a particular edition of the Diagnostic and Statistical Manual (DSM; American Psychiatric Association, 2013). The original DASS, which consisted of 42 items, was shortened to the DASS-21, which has undergone considerable psychometric evaluation (see Clara et al., 2001; Daza et al., 2002; Henry & Crawford, 2005; Norton, 2007).

The need for brief instruments that can quickly and accurately assess symptoms of depression, anxiety, and stress to inform clinical decision-making is critical in most settings, but especially in primary care and community-based organizations that provide mental health treatment, including via telehealth. In such settings, providers tend to be generalists with limited time for evaluation and assessment, yet a large amount of information needs to be gathered to establish a clinical diagnosis. Lengthy assessment instruments used to complement the clinical diagnosis contribute to patient fatigue, which can reduce the validity of the assessment data. Given that Federally Qualified Health Centers, community-based organizations, and primary care entities often work with Latinx individuals, these clinical settings would benefit from a validated short form of an instrument with strong psychometric properties that is available in both English and Spanish to meet their patients’ language preferences (Saavedra et al., 2024; Sanchez et al., 2024). This is especially true in the context of telehealth service delivery.

The aim of this study was to examine the use of moderated nonlinear factor analysis (MNLFA; Bauer, 2017; Bauer & Hussong, 2009) to develop a shorter version of the DASS-21 using data from a community-based treatment clinic primarily serving Latinx patients presenting with depression, anxiety, and stress. The MNLFA model is a flexible psychometric model that, unlike item response theory (IRT) models (e.g., Edelen & Reeve, 2007; Samejima, 1969, 2016), accounts for differential item functioning (DIF) in both factor loadings and item thresholds across multiple predictors simultaneously. Providers have emphasized the need for a shorter instrument for telehealth (telephone and video) service delivery, both during the pandemic and afterward, when segments of the population will continue to have limited access to mental health services (Saavedra et al., 2024; Uscher-Pines et al., 2021).

The DASS has been translated into more than 20 languages, but most of the psychometric work with translated versions of the DASS has used classical test theory (CTT) approaches to score the DASS, even when factor analysis methods have been used to assess item properties (e.g., Daza et al., 2002; Ruiz et al., 2017). We used MNLFA in this study to (a) assess differences in item properties across language, gender, age, and primary diagnosis simultaneously; (b) estimate scale scores that account for differential weighting of symptoms (Bauer, 2017; Curran et al., 2008); and (c) use MNLFA item information functions to develop a short screener that maximally distinguishes clinical from nonclinical levels of negative affectivity (NA) among Hispanic mental health treatment-seekers. Further, this analysis attempts to balance (a) the inclusion of at least one depression, anxiety, and stress item that was found to be invariant across DIF factors and/or (b) the inclusion of at least one depression, anxiety, and stress item that had maximum item information at clinical levels of NA, even if the item was noninvariant across language and/or other DIF factors (see the discussion of partial invariance in Millsap & Kwok, 2004). Such a short-form DASS instrument could be easily integrated into community clinical settings to improve treatment planning and monitoring for English- and Spanish-speaking patients.

Methods

Participants

The sample consisted of 949 individuals (64 percent female; mean age = 32.65 [12.74]) who presented to a community-based mental health treatment center that provides bilingual and culturally informed behavioral health treatment for underserved Spanish-speaking individuals and families. This organization serves more than 2,400 patients per year, the majority of whom are first- and second-generation immigrants. Most of the patient population falls below 200 percent of the federal poverty level. All assessors and therapists are fully bilingual in Spanish and English, and all instruments are available in both languages. Patients are given the option to complete assessment and treatment in their preferred language (Spanish or English). The total sample of 949 was divided into a calibration sample and a validation sample using a random 50/50 split based on the SAS random (binary) number generator function RANBIN. Table 1 presents demographic and diagnostic information, subset by language and sample type (calibration or validation). In a similar albeit independent subsample (n = 161), we also examined test-retest reliability of responses from the 6 items from the fully administered DASS-21 and the items from the shortened version (DASS-6).
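The calibration/validation split described above can be sketched as an independent Bernoulli(0.5) draw per participant, which is what a SAS call such as RANBIN(seed, 1, 0.5) produces. The sketch below is a minimal Python analog, assuming NumPy; the seed value and the function name `split_calibration_validation` are hypothetical and not taken from the study. Because each record is assigned independently, the two halves are only approximately equal in size.

```python
import numpy as np

def split_calibration_validation(n_participants, seed=2017):
    """Assign each participant to calibration (flag 1) or validation (flag 0).

    Hypothetical Python analog of a per-record binomial draw with n = 1, p = 0.5;
    the seed is illustrative only.
    """
    rng = np.random.default_rng(seed)
    flags = rng.binomial(n=1, p=0.5, size=n_participants)  # one Bernoulli(0.5) draw per person
    calibration = np.flatnonzero(flags == 1)               # row indices for the calibration sample
    validation = np.flatnonzero(flags == 0)                # row indices for the validation sample
    return calibration, validation

calib_idx, valid_idx = split_calibration_validation(949)
print(len(calib_idx), len(valid_idx))  # sizes depend on the seed and sum to 949
```

Fixing the seed makes the split reproducible, which matters when calibration estimates are later carried into the validation sample as fixed parameters.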

Measures

Depression, Anxiety, and Stress Scale-21

The DASS-21 is used to assess past-week depression, anxiety, and stress along three 7-item self-report scales (S. H. Lovibond & Lovibond, 1995). Items are rated using a 4-point Likert-type scale (0 = “did not apply to me at all” to 3 = “applied to me very much or most of the time”). The English version of the DASS-21 has good psychometric properties (e.g., internal consistency, convergent and discriminant validity) based on CTT methods (McDonald, 1999; Scholten et al., 2017), as does the Spanish translation of the DASS-21 (Bados et al., 2005). The properties using IRT methods (e.g., local reliability; Chiesi et al., 2017), however, are not yet known.

Psychiatric Diagnosis

Primary diagnoses of anxiety, depression, or posttraumatic stress disorder (PTSD) were based on a diagnostic interview with a licensed service provider (psychiatrist, psychologist, or counselor). Although this interview is not a standard semi-structured interview of the kind used in clinical research, clinicians have access to modules from semi-structured interviews. Table 1 presents DSM-5 diagnoses for the sample. After the clinical assessment, participants completed the DASS-21 and provided demographic information as part of their intake.

Inputs from Community Clinical Experts

As part of this study, we received regular input from community mental health providers with considerable experience implementing evidence-based and evidence-informed approaches in mental health settings, both for broad mental health problems and for heterogeneous groups of Latinx people. This includes expertise delivering services to monolingual and bilingual individuals with various language preferences. Members included leadership and clinical staff from El Futuro (one psychiatrist, two social workers, and three mental health counselors, all licensed in their respective fields).

Importantly, these community mental health providers approached us with a specific challenge: they needed a tool that maintained the utility and reliability of the DASS-21 while also meeting the unique demands of telehealth settings, particularly in rural areas across North Carolina. They emphasized the growing need for evidence-based approaches to monitoring mental health and integrating measurement-based care into their evidence-informed, evaluation-friendly practice. However, the DASS-21 in its current form was perceived as too lengthy and burdensome for consistent use in these settings. This feedback highlighted the importance of balancing psychometric rigor and practical feasibility for providers invested in quality evidence-informed care in real-world, resource-constrained contexts.

Procedure

Assessments were conducted at one of the El Futuro sites, a community-based mental health treatment center that serves patients from rural and urban backgrounds in North Carolina. Data for the development of the DASS-6 were collected between 2017 and 2019. Patients were assessed by a trained and licensed clinician (MD, PhD) or therapist (PhD-level licensed psychologist, master’s-level licensed counselor, or clinical social worker) with experience working with Latinx individuals seeking mental health services and trained in the assessment of DSM-5 disorders, including differential diagnosis between anxiety, depression, PTSD, adjustment and stress disorders, and other related disorders (APA, 2013). To assess reliability in an independent sample collected after the onset of the COVID-19 pandemic, 161 individuals were administered the DASS-21 and the DASS-6 to estimate subscale test-retest reliability (interval: 5–10 days). These data were collected between 2020 and 2023.

Data Analysis Strategy

Tests for Model Fit: Single-Factor and “Total Score” Analog Model

Before fitting MNLFA models, we examined essential unidimensionality in the calibration sample to determine whether a single factor generally underlies the DASS-21 items, using mean- and variance-adjusted weighted least squares estimation in Mplus version 8 (Muthén & Muthén, 2017). As part of testing the fit of a general single factor, we also fit a more restrictive model in which factor loadings/discrimination parameters were constrained to equality, to assess whether the psychometric model that underlies DASS-21 total scores fit the data (the IRT analog is a one-parameter logistic model; Andrich, 1978). Given the widespread use of DASS-21 total scores, fitting this model is consistent with recent calls in the quantitative methods literature to explicitly test and justify the use of total scores by confirming that the psychometric model assumed by the total score fits the data (He et al., 2014; McNeish & Wolf, 2020).

MNLFA: Calibration Sample

First, in the calibration sample, an initial base single-factor MNLFA model was fit in which the 21 DASS symptoms were modeled assuming no DIF across language, gender, age, and primary diagnosis. Mean differences in latent NA across these variables were also examined in this base model as the basis for later examination of differential test functioning (DTF; Jones, 2006; Jones & Gallo, 2002). Next, a series of 21 models, one for each item/symptom, was fit to test whether thresholds and loadings varied across language, gender, age, and primary diagnosis (i.e., DIF). Thresholds indicate the level of the underlying construct (i.e., latent negative affectivity) at which a respondent is equally likely to respond below versus at or above a given category boundary on an item (e.g., 0 vs. 1 or higher). Threshold and/or factor loading DIF values that exceeded an effect size of Cohen’s d = |.25|, per DIF effect size recommendations from Educational Testing Service (Zwick, 2012), were retained from this series of models for inclusion in an interim model in which all DIF parameters were estimated simultaneously. DIF parameters from the interim model that remained above an effect size of d > |.25| were retained for the final scoring model. For each model, including the final MNLFA scoring model, DIF predictors were centered so that the “main” item parameters were interpreted at the mean levels of the predictors. The final MNLFA scoring model was then used to generate latent NA scores in the validation sample that accounted for DIF across all symptoms. This final MNLFA model also estimated mean differences in latent NA (after accounting for DIF) across language, gender, age, and primary diagnosis and compared them with those from the base model; because all models set the NA scale to N(0,1), these “difference-in-difference” estimates indicate the impact of cumulative DIF on NA scores, expressing DTF in Cohen’s d (i.e., effect size) metric (Teresi & Jones, 2016).
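To make the moderation idea concrete, the sketch below shows how an MNLFA-style ordinal item lets its loading and thresholds shift as linear functions of centered covariates, and how those person-specific parameters feed a cumulative logit for the four DASS response categories. It is a minimal illustration, not the study's estimation code: the logistic link, the use of one common threshold shift per covariate (uniform DIF applied equally to every threshold), the covariate order, and all numeric values are assumptions. The moderation of the latent mean and variance, which MNLFA also supports, is omitted.

```python
import numpy as np

def moderated_item(x, lam0, lam_dif, tau0, tau_dif):
    """Person-specific loading and thresholds for one ordinal item.

    x       : centered covariates for one person, e.g. [language, gender, age, diagnosis]
    lam0    : "main" loading, which applies when every covariate is at its mean (x = 0)
    lam_dif : loading DIF coefficients, one per covariate (nonuniform DIF)
    tau0    : increasing "main" thresholds, one per category boundary
    tau_dif : threshold DIF coefficients, one per covariate; the same shift is applied
              to every threshold (uniform DIF), a simplifying assumption
    """
    x = np.asarray(x, dtype=float)
    lam = lam0 + np.dot(lam_dif, x)
    tau = np.asarray(tau0, dtype=float) + np.dot(tau_dif, x)
    return lam, tau

def category_probabilities(eta, lam, tau):
    """P(Y = 0, ..., K-1) under a cumulative logit: P(Y >= k) = logistic(lam * eta - tau_k)."""
    p_at_or_above = 1.0 / (1.0 + np.exp(-(lam * eta - tau)))
    upper = np.concatenate(([1.0], p_at_or_above))   # P(Y >= k) for k = 0..K-1
    lower = np.concatenate((p_at_or_above, [0.0]))   # P(Y >= k + 1)
    return upper - lower

# Hypothetical item with threshold (uniform) DIF on the first covariate only
lam0, lam_dif = 1.8, [0.0, 0.0, 0.0, 0.0]
tau0, tau_dif = [-0.5, 0.7, 1.9], [0.3, 0.0, 0.0, 0.0]
for label, x in [("covariates at their means", [0.0, 0.0, 0.0, 0.0]),
                 ("first covariate 0.5 above its mean", [0.5, 0.0, 0.0, 0.0])]:
    lam, tau = moderated_item(x, lam0, lam_dif, tau0, tau_dif)
    print(label, np.round(category_probabilities(0.39, lam, tau), 3))
```

Because the covariates are centered, setting `x` to zeros recovers the "main" parameters, mirroring the interpretation described above. Under this parameterization a positive threshold DIF coefficient means that, at the same level of NA, higher response categories are endorsed less often.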

Further, selection of the items for the newly proposed DASS-6 was based on three criteria. First, items were prioritized by the rank ordering of item information function values at the mean of the NA scale for the most severe diagnostic grouping (i.e., depression diagnosis; see Results section), as recommended by Wyse and Babcock (2016) for maximizing classification accuracy with short forms. Second, priority was given to items that were invariant across all four DIF predictors. Finally, conditional on the first two priorities, two items each were selected from the depression, anxiety, and stress subscales to maximize representation of all three domains, given that the criterion variables were the three diagnoses.
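The first criterion ranks items by their information at a single point on the NA scale. A minimal sketch of that computation is shown below, assuming a graded-response (cumulative logit) item with discrimination `a` and increasing category boundaries `b` on the latent scale; for a loading/threshold parameterization like the one sketched earlier, a corresponds to λ and each b_k to τ_k/λ. Item information is the sum over categories of the squared derivative of each category probability divided by that probability (Samejima's formula). The item names and parameter values are hypothetical placeholders, not the study's estimates; the evaluation point 0.39 is the clinical cut point reported in the Results.

```python
import numpy as np

def grm_item_information(theta, a, b):
    """Fisher information of one graded-response (cumulative logit) item at theta.

    a: discrimination; b: increasing category boundaries on the latent scale.
    """
    b = np.asarray(b, dtype=float)
    p_star = 1.0 / (1.0 + np.exp(-a * (theta - b)))  # P(Y >= k), k = 1..K-1
    p_star = np.concatenate(([1.0], p_star, [0.0]))  # add P(Y >= 0) = 1 and P(Y >= K) = 0
    d_star = a * p_star * (1.0 - p_star)             # dP(Y >= k)/dtheta; zero at both ends
    p_cat = p_star[:-1] - p_star[1:]                 # category probabilities P(Y = k)
    d_cat = d_star[:-1] - d_star[1:]                 # their derivatives with respect to theta
    return float(np.sum(d_cat ** 2 / p_cat))

# Hypothetical parameters for three items (illustrative only)
items = {
    "item_A": (2.1, [-0.2, 0.6, 1.4]),
    "item_B": (1.4, [0.1, 0.9, 1.8]),
    "item_C": (2.6, [0.0, 0.7, 1.5]),
}
cut = 0.39  # evaluation point on the N(0, 1) latent NA scale
ranking = sorted(items, key=lambda name: grm_item_information(cut, *items[name]), reverse=True)
for name in ranking:
    print(name, round(grm_item_information(cut, *items[name]), 3))
```

With a single boundary, the formula reduces to the familiar binary-item result a²P(1 − P), which is a quick sanity check on the implementation.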

MNLFA: Validation Sample

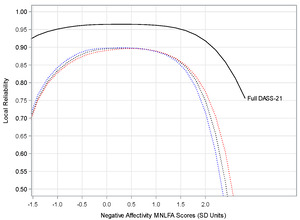

In the validation sample, both the full DASS-21 and the DASS-6 were scored treating the MNLFA item parameter estimates in the calibration sample as fixed item parameters in the validation sample. Local reliability was then assessed for both the full DASS-21 and the DASS-6. Under CTT, the most common measure of reliability (at least as defined here as internal consistency) used in practice is, of course, Cronbach’s α. Under CTT, α presumes that the reliability of a score is constant throughout the range of the construct, which is often unrealistic in practice. In factor analysis/item response theory (FA/IRT), the concept of reliability is “local,” or specific to different levels of the construct (Embretson & Reise, 2000); for health outcomes research, a measure is ideally at its maximum reliability at the level of the construct at which a diagnostic decision is made (Chiesi et al., 2017; Morgan-López et al., 2020). To calculate and graph local reliability (LR), test information function (TIF) values are output, where TIF values are the expected value of the inverse of the error variances for each estimated value of the latent construct score; these values can be requested as output from Mplus. Then, the TIF values are converted to LR values using 1 – (1/(TIF)) for each specific value of the latent construct score.
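The conversion from test information to local reliability described above is a one-line formula, and inverting it shows how much information a score needs to reach a given reliability. The sketch below applies the formula exactly as stated in the text; note that some software reports information that includes a contribution of 1 from the N(0,1) prior, so the input should match whatever TIF the program outputs. Function names are illustrative.

```python
def local_reliability(tif):
    """Convert a test information value to local reliability: LR = 1 - 1/TIF."""
    if tif <= 0:
        raise ValueError("test information must be positive")
    return 1.0 - 1.0 / tif

def information_needed(target_lr):
    """Invert the conversion: the TIF required to reach a target local reliability."""
    if not 0.0 < target_lr < 1.0:
        raise ValueError("target reliability must lie strictly between 0 and 1")
    return 1.0 / (1.0 - target_lr)

print(local_reliability(10.0))             # 0.9
print(round(information_needed(0.85), 2))  # 6.67
print(round(information_needed(0.92), 2))  # 12.5
```

In these terms, a local reliability of 0.90 corresponds to a TIF of 10, 0.85 to about 6.67, and 0.92 to 12.5, which makes the reliability thresholds reported in the Results easy to read as information targets.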

DASS-6 MNLFA Score Differences by Baseline Diagnosis

As part of the scoring model for generating DASS-6 scores under MNLFA in the validation sample, paths from diagnostic grouping dummy variables to latent NA were estimated with a random intercept structure (to accommodate clustering among raters), with baseline primary diagnosis groupings (depression, anxiety, PTSD, no diagnosis) as the grouping variable. This analysis tested the significance of differences between groups in DASS-6 severity scores generated under MNLFA (i.e., a de facto validity check).

Clinical Validation Metrics

To assess the clinical utility of the MNLFA score estimates for the DASS-6, positive and negative predictive values (PPV, NPV) were calculated at specific cut points on the MNLFA score. The corresponding range of DASS-6 total scores (which, for six items each scored 0 to 3, can range from 0 to 18) was also examined to show the approximate correspondence between the total symptom score and the MNLFA score.
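As a reminder of how these metrics are defined, PPV is the proportion of patients flagged as positive (score at or above the cut) who actually have the diagnosis, and NPV is the proportion of patients flagged as negative who do not. The sketch below computes both from scores and diagnosis indicators at a single cut point; it assumes NumPy, treats a score at or above the cut as a positive screen, and uses made-up toy data rather than study data.

```python
import numpy as np

def predictive_values(scores, has_diagnosis, cut):
    """Return (PPV, NPV) when a score >= cut counts as a positive screen."""
    scores = np.asarray(scores, dtype=float)
    dx = np.asarray(has_diagnosis, dtype=bool)
    positive = scores >= cut
    tp = np.sum(positive & dx)    # flagged and diagnosed
    fp = np.sum(positive & ~dx)   # flagged but not diagnosed
    tn = np.sum(~positive & ~dx)  # not flagged and not diagnosed
    fn = np.sum(~positive & dx)   # not flagged but diagnosed
    ppv = tp / (tp + fp) if (tp + fp) else float("nan")
    npv = tn / (tn + fn) if (tn + fn) else float("nan")
    return ppv, npv

# Toy data: latent NA score estimates and a diagnosis indicator (hypothetical)
scores = [1.2, 0.8, 0.6, 0.3, -0.1, -0.4, 0.7, -0.9]
dx = [1, 1, 1, 1, 1, 0, 0, 0]
ppv, npv = predictive_values(scores, dx, cut=0.5)
print(ppv, npv)  # 0.75 0.5
```

Both quantities depend on how common the diagnosis is in the sample, so in a treatment-seeking sample where most patients carry a diagnosis, a high PPV paired with a more modest NPV is expected.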

Test-Retest Reliability

After identifying the DASS-6 items, we collected 1-week test-retest reliability data from an independent sample of participants. The DASS-21 was administered at the first visit and the DASS-6 items at the return visit 1 week later (range: 5–10 days).

Results

Preliminary Tests of Model Fit

The conventional one-factor model for the DASS-21 items fit the data well (comparative fit index = 0.97; root mean square error of approximation = 0.054, 95 percent confidence interval [0.051, 0.057]). A more restrictive model with equality constraints on factor loadings fit significantly worse than the conventional one-factor model (Δχ2(20) = 679.75; P < 0.001). Thus, a psychometric model that assumes the DASS-21 items have equal weight (i.e., total scores) is likely to produce significant bias in scale scoring.

DASS-21 Calibration Sample MNLFA

Overall descriptive statistics across DASS-21 language groups, including age, gender, and clinical diagnosis, are shown in Table 1. In the calibration sample, the Spanish sample was significantly older (b = 13.69 [3.49]; P < 0.001), had a higher proportion of women (χ2[1] = 6.54; P < 0.001), and differed in diagnostic makeup (χ2[4] = 10.51; P = 0.03). Depression was most prevalent in the English sample, and stress was most prevalent in the Spanish sample. Table 2 shows the primary item parameters from the final MNLFA model, and Table 3 shows the final model’s factor loading and threshold DIF parameters (those that exceeded d > |.25|). As Table 3 shows, 16 of the 21 items were invariant across language (5 showed threshold [i.e., uniform] DIF across language, and none showed factor loading DIF), and 9 items were fully invariant across all DIF factors. The final DASS-21 MNLFA scoring model, with DIF incorporated for the remaining items, fit significantly better than the base model (χ2[16] = 267.50; P < 0.0001).

In the final MNLFA model, Spanish speakers scored higher on NA than English speakers (b = 0.451 [0.115]; t = 3.929; P < 0.001). Note that, because the latent variable variance was set to 1 for NA, mean differences are already in Cohen’s d/standardized mean difference units. Women (d = 0.42, P < 0.001) and patients with a primary diagnosis of depression (d = 0.389, P = 0.001) had both statistically significant and meaningful effect size differences compared with men and the other diagnosis grouping, respectively. Patients with a primary diagnosis of anxiety (d = 0.37, P = 0.058) showed nonsignificant but meaningful elevations on NA, whereas patients with primary PTSD did not show differences (d = 0.12, P = 0.31). Assessment of DTF (Teresi & Jones, 2016) was conducted to examine the practical impact of collective DIF on scale score estimates. This was done by comparing the effect sizes for differences in latent NA across language/age/gender/diagnosis from the final model against the corresponding effect sizes from the base model with no DIF. For example, the language effect size on latent NA for the base model (d = 0.461) versus the language effect size from the final model (d = 0.451) show a “difference-in-difference” effect size (ES) that was negligible (Δd = |.01|). All other similar Δd values were less than |.042|, suggesting little-to-no practical impact on NA scale scores of modeled item-level DIF on DTF.
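The DTF check above is simple arithmetic once both models fix the latent variance at 1: each group coefficient is already a standardized mean difference, so the impact of modeled DIF is the absolute change in that coefficient between the base (no-DIF) and final (DIF-adjusted) models. The sketch below reproduces the reported language comparison; the function name is illustrative.

```python
def dtf_difference(d_base, d_final):
    """Difference-in-difference effect size: |d(final model, DIF modeled) - d(base model, no DIF)|."""
    return abs(d_final - d_base)

# Language effect on latent NA, as reported for the base and final models
delta_language = dtf_difference(d_base=0.461, d_final=0.451)
print(round(delta_language, 3))  # 0.01
```

A small Δd means that, although individual items show DIF, the DIF largely cancels out at the scale level, so group comparisons on NA scores change very little once it is modeled.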

Item information curves (IICs) were examined to select the optimal items for the proposed DASS-6 in the following order of priority: (a) rank ordering of IIC values at a “clinical cut point” (here, the midpoint of the distribution of latent NA for patients with depression, +.39 SDs above the mean on latent NA); (b) invariance across all DIF factors (if possible); (c) invariance across language (even if DIF by other predictors was present); (d) representation across DASS-21 subdimensions (despite the unidimensional fit noted previously); and (e) preference for items based on provider input. Table 4 shows the selected items (with IICs in Figure 1) and the joint criteria by which the DASS-6 items were selected: Difficulty breathing (item 4; anxiety), Close to panic (item 15; anxiety), Nothing to look forward to (item 10; depression), Unable to become enthusiastic (item 16; depression), Nervous energy (item 8; stress), and Difficulty relaxing (item 12; stress). Two items that may have had superior item properties (Feeling blue, Aware of increased heart rate) were not included. Feeling blue (item 13; depression), despite having the second largest IIC overall, was not selected because of DIF by language; this likely reflects that the English colloquialism “feeling blue” has no direct analog in Spanish. Providers for the study remarked that Difficulty breathing might more clearly reflect a physiological symptom associated with distress than an increase in heart rate (item 19; anxiety), which could also result from emotions such as anger or excitement.
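One way to see how the ranking and invariance criteria interact is a simplified, mechanical version of the selection rule: within each subscale, take the most informative items that are invariant across language, and backfill with the next most informative items only if too few invariant items exist. This is a hypothetical sketch, not the authors' procedure; their selection also weighed invariance across the other DIF predictors and provider input (for example, retaining one item with threshold DIF by language), which this rule does not reproduce. Item identifiers and information values are invented.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    item_id: str              # hypothetical identifier
    subscale: str             # "depression", "anxiety", or "stress"
    info_at_cut: float        # item information at the clinical cut point
    language_invariant: bool  # True if no DIF by language

def select_items(candidates, per_subscale=2):
    """Per subscale: most informative language-invariant items first, then backfill by information."""
    selected = []
    for subscale in sorted({c.subscale for c in candidates}):
        pool = sorted((c for c in candidates if c.subscale == subscale),
                      key=lambda c: c.info_at_cut, reverse=True)
        chosen = [c for c in pool if c.language_invariant][:per_subscale]
        for c in pool:  # backfill only if too few invariant items were available
            if len(chosen) >= per_subscale:
                break
            if c not in chosen:
                chosen.append(c)
        selected.extend(chosen)
    return selected

candidates = [
    Candidate("D1", "depression", 3.0, False), Candidate("D2", "depression", 2.5, True),
    Candidate("D3", "depression", 2.2, True), Candidate("A1", "anxiety", 2.8, True),
    Candidate("A2", "anxiety", 2.0, False), Candidate("A3", "anxiety", 1.1, False),
    Candidate("S1", "stress", 1.9, True), Candidate("S2", "stress", 1.7, True),
    Candidate("S3", "stress", 1.2, True),
]
chosen = select_items(candidates)
print([c.item_id for c in chosen])
```

In this toy pool, the most informative depression item (D1) is skipped because of language DIF, analogous to the treatment of Feeling blue, while the anxiety subscale must backfill with a noninvariant item.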

Validation Sample: Scoring and Local Reliability

In the validation sample, both the full DASS-21 and DASS-6 were scored for the English and Spanish versions using the item parameter estimates from either the full 21-item set or the selected set of 6 items based on parameter estimates in Tables 2 and 3. Local reliability (overall and conditional on predictors) was then assessed for scale scores estimated from the full DASS-21 and the DASS-6 based on conversion of the test information function values (Chiesi et al., 2017). Figure 2 shows the local reliabilities for the DASS-21 (solid line) and the proposed DASS-6 for English, Spanish, and overall (dashed lines). Local reliability values for the DASS-21 MNLFA scores remained above 0.92 throughout the range of latent NA, and these reliability values did not vary across language, gender, age, or diagnosis. The DASS-6 scale scores overall and across language (no DIF was observed in the six selected items other than threshold DIF by language for “Nothing to Look Forward To”) remained above 0.85 reliability throughout most of the practical range of latent negative affectivity, with a peak reliability of 0.90 at the +.39 standard deviation (SD) clinical cut point.

Random Effects ANOVA: DASS-6 MNLFA Score Differences

An ANOVA model with a random intercept structure was performed with baseline diagnosis groupings (depression only [D], anxiety only [A], PTSD only [P], and no depression, anxiety, or PTSD [no DAP]) as the grouping variable; the no DAP group was used as the reference group. The model accounted for a significant amount of variability in DASS-6 MNLFA scores in the validation sample (F [3, 488] = 11.21; P < 0.0001; r2 = 0.064). The depression (d = 0.85; P < 0.001), anxiety (d = 0.99; P < 0.001), and PTSD (d = 0.55; P = 0.018) diagnostic groups showed significant and meaningful differences in DASS-6 NA scale scores compared with the no DAP group. Further, none of these effect sizes differed by more than |.02| from those in a comparable random effects ANOVA for MNLFA scale scores from the full DASS-21 in the validation sample.

Clinical Validation Metrics

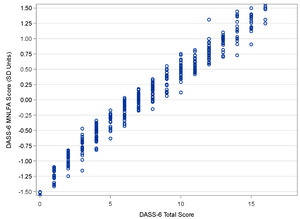

Table 5 presents clinical validation metrics across a selected range of NA. At 0.5 SDs above the mean DASS-6 MNLFA scale score (close to the +.39 SDs used for IIC selection), PPVs (e.g., the proportion of patients scoring at or above the cut point who had a depression diagnosis) ranged from 0.88 to 0.95 across the three disorders, while NPVs (e.g., the proportion of patients scoring below the cut point who did not have a depression diagnosis) ranged from 0.53 to 0.64. The DASS-6 MNLFA cut score roughly translates to a DASS-6 total score of 10, with some patients reaching the clinical range with scores of 8 (depending on the combination of symptoms); because items are weighted differently through variation in factor loadings, the same total score can lead to different MNLFA score estimates and vice versa (Morgan-López et al., 2024; Saavedra et al., 2021, 2022). DASS-6 total scores and MNLFA scores were highly correlated (r = 0.98; see the scatterplot in Figure 3). Selecting a DASS-6 cut score with a high PPV and low-to-moderate NPV reflects a clinical context where false negatives are more acceptable than false positives, such as with patients who may be in subthreshold clinical distress that could still benefit from treatment (Trevethan, 2017).

Independent Sample Test-Retest Reliability

We also included an independent sample of patients (n = 161). In this sample, Cronbach’s alpha was 0.93 for the DASS-6, and 0.88 for depression, 0.88 for anxiety, and 0.89 for stress, indicating acceptable internal consistency. In addition, test-retest reliability of the DASS-21 and DASS-6 was good (0.89), and intraclass correlation coefficients (ICCs) for all domains were adequate, ranging from 0.75 to 0.86.
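For reference, the internal consistency values above follow the standard Cronbach's alpha formula: α = k/(k − 1) × (1 − Σ item variances / variance of the total score), for k items. A minimal sketch is below, assuming NumPy and sample variances (ddof = 1); the toy matrices are invented and only illustrate the computation. As noted in the Methods, alpha treats reliability as constant across the construct, unlike the local reliability used for the MNLFA scores.

```python
import numpy as np

def cronbach_alpha(item_scores):
    """Cronbach's alpha for an (n_respondents x k_items) array of item scores."""
    x = np.asarray(item_scores, dtype=float)
    k = x.shape[1]
    item_variances = x.var(axis=0, ddof=1)       # sample variance of each item
    total_variance = x.sum(axis=1).var(ddof=1)   # sample variance of the summed score
    return k / (k - 1) * (1.0 - item_variances.sum() / total_variance)

# Toy two-item example (hypothetical responses on the 0-3 DASS scale)
responses = [[0, 1], [1, 1], [2, 3], [3, 2], [1, 0]]
print(round(cronbach_alpha(responses), 3))
```

For a two-item subscale like those in the DASS-6, alpha depends almost entirely on how strongly the two items covary relative to their individual variances.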

Discussion

The systematic and precise measurement of symptoms before, during, and after treatment is an important tenet in evidence-based practice (Joiner et al., 2005). This is routinely challenging for providers in real-life settings where there is limited time to gather needed information. The development of reliable brief instruments that allow providers and clients to maximize their in-session time while still capturing psychometrically sound and clinically useful information is critical. Additionally, for services provided to the US Latinx community, having an instrument that assesses depression, anxiety, and stress is particularly important (Chang & Biegel, 2018; Derr, 2016; Stone et al., 2022); this is especially relevant for clinicians serving both English- and Spanish-speaking clients. Having one assessment that can be reliably administered in both languages reduces additional burden placed on clinicians to learn and master multiple new instruments.

In close consultation with community mental health providers, this study used advances in MNLFA to identify common mental health symptoms in a Latinx clinical population of adult patients who present to a community-based outpatient treatment center. The six items that make up the DASS-6 include: Difficulty breathing (DASS-21 item 4); Nervous energy (DASS-21 item 8); Nothing to look forward to (DASS-21 item 10); Difficult to relax (DASS-21 item 12); Close to panic (DASS-21 item 15); and Unable to become enthusiastic (DASS-21 item 16). Results showed that these six items can reliably discriminate anxiety and depression severity levels in both the Spanish and English versions of the abbreviated instrument. This is critical for monitoring in telehealth settings where shorter instruments are easier to implement. This tool aids providers in accurately identifying and monitoring the severity of mental health conditions in a culturally and linguistically competent manner. The DASS-6 can be an especially valuable tool in early identification and prevention planning for individuals who may require more time to develop rapport and for whom there is a higher likelihood for their symptoms to escalate to more severe levels.

We also underscore additional benefits of the DASS-6. The DASS-6 is an efficient tool for measuring the severity of overall NA (i.e., symptoms of depression, anxiety, and stress) while accounting for measurement bias across Spanish and English language usage. An important contribution is the development of the shortened version in both English and Spanish. Although caution is warranted when using this screening instrument as a diagnostic tool, in many settings such as telehealth and primary care, this reliable brief form, which requires significantly less time to administer and score, can be used to quickly identify patients who require further assessment and, eventually, treatment.

Moreover, this instrument can be useful for monitoring treatment progress, especially in settings where time is particularly limited. Initial test-retest reliability and internal consistency of the DASS-6 provide preliminary support for its suitability for ongoing monitoring of symptom severity over time. In telehealth settings, where longitudinal patient engagement can be challenging, the DASS-6 offers a reliable means of tracking treatment progress and outcomes, supporting more personalized and dynamic treatment planning as well as measurement-based care. Further, previous analysis of the DASS-21 showed that, while several items showed differential item functioning across time (which could compromise precision of estimates of individual patient changes), this DIF was not observed among the items selected for the DASS-6 (Morgan-López et al., 2022). Despite these initial findings, additional research will be needed to determine the utility of the DASS-6 in capturing change over time, particularly after an intervention targeting depression, anxiety, and stress symptoms. Future research should also examine whether information gathered by the DASS-6 shows similar group- and individual-level change in response to treatment relative to the DASS-21.

An important strength of this study lies in the collaboration with community mental health providers, who emphasized the need for a brief yet reliable tool that could be integrated into telehealth and in-person settings. Providers working in rural areas identified a gap in tools that could efficiently monitor symptoms while adhering to evidence-informed approaches for monitoring and measurement-based care. Although the DASS-21 offers a shortened alternative to the original DASS, it remained too lengthy to be practical, especially in telehealth contexts, where time is at a premium and patient engagement is key. By using established methodological approaches such as MNLFA to derive the DASS-6, this study offers a meaningful solution for both clinicians and clients: an instrument designed for utility, reliability, and feasibility in real-world settings. The partnership with clinicians underscores the importance of designing tools that are both psychometrically sound and responsive to the practical demands of providers working with underserved populations.

Limitations

Results from this study may be specific to Latinx populations presenting to community-based care organizations. Additional research should examine the performance of the DASS-6 as a brief screener with non-Latinx individuals in similar primary care and/or outpatient community-based organizations. Although this does limit generalizability to other populations, there is a need for more precise measurement tools for this heterogeneous and underserved group of people. Nevertheless, additional studies with different populations should be conducted. Also, the DASS-6 (and the DASS-21) measure general anxiety and not specific anxiety disorders. Future research should examine the utility of the DASS-6 in differentiating between specific anxiety disorders and explore whether item severity has a transdiagnostic component for measuring anxiety disorder severity, as these research questions were beyond the scope of the current study. Finally, in this study, assessments were conducted by experienced and licensed clinicians and therapists all with substantial experience with anxiety and depressive disorders, but fidelity was not monitored.

Limitations notwithstanding, the present study provides an approach for reliably developing other abbreviated symptom monitoring instruments that would be beneficial to clinicians. One of the silver linings of the COVID-19 pandemic has been the expanded capacity built around telehealth service provision. Post-pandemic, there will undoubtedly be a continued need for telehealth services to reach individuals with limited resources to access care. Telehealth service provision has opened avenues for this work, but more research is needed on assessment and treatment monitoring. This study’s use of MNLFA to reliably identify the most relevant symptoms can help researchers and practitioners continue working toward access to quality mental health services in clinical settings.

Overall, the validation of a shortened, bilingual assessment tool for depression, anxiety, and stress represents a significant step forward in making mental health care more accessible and effective for the Latinx community. It aligns with the broader goal of enhancing mental health service delivery through improved assessment and treatment monitoring, particularly in the context of the burgeoning field of telehealth. This work not only benefits clinicians by providing efficient, reliable tools in dual languages, but also supports the wider aim of improving mental health outcomes for underserved populations.

Data Availability Statement

The data supporting the current study are available from the authors upon reasonable request.

Acknowledgments

We would like to thank the providers and clients who gave their time, insights and trust to make this work possible. Your contributions were invaluable in shaping this research and ensuring its relevance to real-world clinical practice.

The work presented in this manuscript was supported by grants from the National Institute of Justice (NIJ grant 2018-ZD-CX-0001, Saavedra, L.M., PI) and funding from the RTI Fellows Program (Saavedra, L. M.; Morgan-López, A.A.).

RTI Press Associate Editor: Marcus Berzofsky